Monday, 29 March 2021

Sunday, 28 March 2021

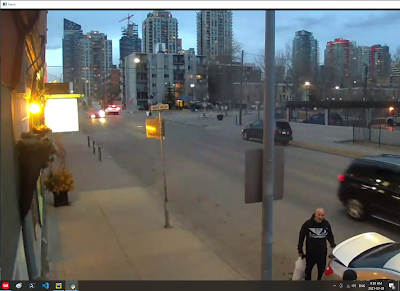

opencv 25 background subtraction | motion detection

background subtraction marks pixels that differ from a learned background model, so moving objects show up in the mask

# main.py
import cv2

cap = cv2.VideoCapture("assets/live cam.mp4")

# MOG2 models each pixel's background as a mixture of Gaussians; pixels that
# don't fit the model are foreground (255), detected shadows are gray (127) by default
background_subtract = cv2.createBackgroundSubtractorMOG2()

while True:
    ret, frame = cap.read()
    if not ret:  # end of video or read error
        break

    mask = background_subtract.apply(frame)

    cv2.imshow('frame', frame)
    cv2.imshow('background subtract', mask)

    # read the keyboard once per frame so no key press is lost
    key = cv2.waitKey(1) & 0xFF
    if key == ord('q'):
        break
    if key == ord('p'):
        # wait until any key is pressed
        cv2.waitKey(-1)

cap.release()
cv2.destroyAllWindows()
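MOG2 itself is fairly involved, so here is a minimal NumPy-only sketch of the same idea using a simpler, swapped-in technique: a running-average background model (OpenCV's cv2.accumulateWeighted does the same averaging). The helper names `update_background` and `foreground_mask`, the blend factor `alpha` and the threshold of 25 are illustrative assumptions, not part of the script above.

```python
import numpy as np

def update_background(background, frame, alpha=0.05):
    """Hypothetical helper: exponential running average, the model drifts slowly toward the frame."""
    return (1 - alpha) * background + alpha * frame

def foreground_mask(background, frame, threshold=25):
    """Hypothetical helper: 255 where the frame differs from the model by more than threshold."""
    diff = np.abs(frame.astype(np.float32) - background)
    return np.where(diff > threshold, 255, 0).astype(np.uint8)

# static gray scene (8x8, value 100) used to initialise the background model
background = np.full((8, 8), 100, dtype=np.float32)

# next frame: a bright 2x2 "object" appears in the top-left corner
frame = np.full((8, 8), 100, dtype=np.uint8)
frame[0:2, 0:2] = 200

mask = foreground_mask(background, frame)
background = update_background(background, frame)
print(np.count_nonzero(mask))  # -> 4
```

A small `alpha` means a moving object has to stay still for many frames before it is absorbed into the background.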


Saturday, 27 March 2021

opencv 24 houghcircle | circle detection

circles are detected with cv2.HoughCircles on the median-blurred gray image

blur

# main.py
import numpy as np
import cv2

cap = cv2.VideoCapture("assets/plate.mp4")

while True:
    ret, frame = cap.read()
    if not ret:  # end of video or read error
        break

    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    # median blur suppresses noise that would otherwise produce false circles
    blur = cv2.medianBlur(gray, 5)

    # HoughCircles(image, method, dp, minDist between centres,
    #              param1 = upper Canny threshold, param2 = accumulator threshold,
    #              minRadius, maxRadius)
    circles = cv2.HoughCircles(blur, cv2.HOUGH_GRADIENT, 1, 50, param1=50,
                               param2=50, minRadius=10, maxRadius=100)

    if circles is not None:
        detected_circles = np.uint16(np.around(circles))
        for (x, y, r) in detected_circles[0, :]:
            cv2.circle(frame, (int(x), int(y)), int(r), (0, 255, 0), 2)

    cv2.imshow('frame', frame)
    cv2.imshow('gray', gray)
    cv2.imshow('blur', blur)

    # read the keyboard once per frame so no key press is lost
    key = cv2.waitKey(1) & 0xFF
    if key == ord('q'):
        break
    if key == ord('p'):
        # wait until any key is pressed
        cv2.waitKey(-1)

cap.release()
cv2.destroyAllWindows()
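The voting idea behind circle detection can be sketched in NumPy for a single known radius. This is an assumption-laden simplification: cv2.HOUGH_GRADIENT also uses edge gradient directions and searches a range of radii, while the hypothetical `hough_circle_votes` below just lets every edge pixel vote for all centres `radius` pixels away from it.

```python
import numpy as np

def hough_circle_votes(edges, radius, n_angles=360):
    """Hypothetical helper: accumulator of votes for centres of circles with one known radius."""
    h, w = edges.shape
    acc = np.zeros((h, w), dtype=np.int32)
    thetas = np.linspace(0, 2 * np.pi, n_angles, endpoint=False)
    ys, xs = np.nonzero(edges)
    for y, x in zip(ys, xs):
        # all candidate centres lying `radius` pixels away from this edge pixel
        a = np.round(x - radius * np.cos(thetas)).astype(int)
        b = np.round(y - radius * np.sin(thetas)).astype(int)
        inside = (a >= 0) & (a < w) & (b >= 0) & (b < h)
        if not inside.any():
            continue
        # one vote per candidate centre per edge pixel
        centres = np.unique(np.stack([b[inside], a[inside]], axis=1), axis=0)
        acc[centres[:, 0], centres[:, 1]] += 1
    return acc

# synthetic edge image: a circle of radius 10 centred at x=25, y=20
edges = np.zeros((40, 50), dtype=np.uint8)
t = np.linspace(0, 2 * np.pi, 200, endpoint=False)
edges[np.round(20 + 10 * np.sin(t)).astype(int),
      np.round(25 + 10 * np.cos(t)).astype(int)] = 255

acc = hough_circle_votes(edges, radius=10)
cy, cx = np.unravel_index(np.argmax(acc), acc.shape)
print(cx, cy)  # strongest centre, at or right next to (25, 20)
```

In the script above, `param2` plays the role of a minimum vote count: lower it and more (possibly false) circles pass.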


Friday, 26 March 2021

Thursday, 25 March 2021

Wednesday, 24 March 2021

opencv 23 hough transform | line detection

the hough transform is applied to the canny edge image to detect straight lines (cv2.HoughLinesP returns line segments)

canny edge

# main.py
import numpy as np
import cv2

cap = cv2.VideoCapture("assets/Tokyo night drive.mp4")

while True:
    ret, frame = cap.read()
    if not ret:  # end of video or read error
        break

    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    # very high thresholds keep only the strongest edges
    edges = cv2.Canny(gray, 254, 255, apertureSize=3)

    # HoughLinesP(edges, rho resolution in px, theta resolution in rad,
    #             vote threshold, minLineLength, maxLineGap)
    lines = cv2.HoughLinesP(edges, 1, np.pi / 180, 100,
                            minLineLength=100, maxLineGap=10)

    if lines is not None:
        for line in lines:
            x1, y1, x2, y2 = map(int, line[0])
            cv2.line(frame, (x1, y1), (x2, y2), (0, 0, 255), 5)

    cv2.imshow('frame', frame)
    cv2.imshow('gray', gray)
    cv2.imshow('canny edge', edges)

    # read the keyboard once per frame so no key press is lost
    key = cv2.waitKey(1) & 0xFF
    if key == ord('q'):
        break
    if key == ord('p'):
        # wait until any key is pressed
        cv2.waitKey(-1)

cap.release()
cv2.destroyAllWindows()
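To see what the `rho`/`theta` arguments mean, here is a NumPy sketch of the standard (rho, theta) accumulator, the idea behind cv2.HoughLines; HoughLinesP is a probabilistic variant that returns segment end points instead. The helper `hough_lines` and the 1 degree / 1 pixel resolution are illustrative assumptions matching the `1, np.pi / 180` used above.

```python
import numpy as np

def hough_lines(edges, theta_steps=180):
    """Hypothetical helper: every edge pixel votes once per angle.

    A line is every point with x*cos(theta) + y*sin(theta) = rho.
    """
    h, w = edges.shape
    diag = int(np.ceil(np.hypot(h, w)))
    thetas = np.deg2rad(np.arange(theta_steps))                   # 0..179 degrees
    acc = np.zeros((2 * diag + 1, theta_steps), dtype=np.int32)   # rho in [-diag, diag]
    cols = np.arange(theta_steps)
    ys, xs = np.nonzero(edges)
    for x, y in zip(xs, ys):
        rhos = np.round(x * np.cos(thetas) + y * np.sin(thetas)).astype(int)
        acc[rhos + diag, cols] += 1
    return acc, thetas, diag

# synthetic edge image with one vertical line at x = 12
edges = np.zeros((30, 30), dtype=np.uint8)
edges[:, 12] = 255

acc, thetas, diag = hough_lines(edges)
rho_idx, theta_idx = np.unravel_index(np.argmax(acc), acc.shape)
print(rho_idx - diag, np.rad2deg(thetas[theta_idx]))  # -> 12 0.0
```

All 30 pixels of the line agree on (rho=12, theta=0), which is why the vote threshold (100 in the script) filters out short or broken lines.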


Tuesday, 23 March 2021

opencv 22 histogram

red channel

green channel

blue channel

histogram

# main.py
import cv2
from matplotlib import pyplot as plt

img = cv2.imread('assets/mountain2.jpg')
if img is None:
    raise FileNotFoundError('could not read assets/mountain2.jpg')

# OpenCV loads images in BGR channel order
b, g, r = cv2.split(img)

cv2.imshow('img', img)
cv2.imshow('b', b)
cv2.imshow('g', g)
cv2.imshow('r', r)

fig, axs = plt.subplots(1, 3)

# 256 bins over [0, 256): one bin per 8-bit pixel value
axs[0].hist(b.ravel(), 256, [0, 256], color='b')
axs[0].set_title('blue')
axs[1].hist(g.ravel(), 256, [0, 256], color='g')
axs[1].set_title('green')
axs[2].hist(r.ravel(), 256, [0, 256], color='r')
axs[2].set_title('red')

for ax in axs.flat:
    ax.set(xlabel='pixel value', ylabel='count')

# Hide x labels and tick labels for top plots and y ticks for right plots.
for ax in axs.flat:
    ax.label_outer()

# single-plot alternative:
# plt.hist(b.ravel(), 256, [0, 256])
# plt.hist(g.ravel(), 256, [0, 256])
# plt.hist(r.ravel(), 256, [0, 256])

fig.suptitle('image histogram')
plt.show()

cv2.waitKey(0)
cv2.destroyAllWindows()
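The counts that `axs[i].hist(channel.ravel(), 256, [0, 256])` plots can also be computed directly. A minimal sketch with NumPy's `bincount` (the helper name `channel_histograms` is hypothetical); OpenCV's own equivalent is `cv2.calcHist([img], [0], None, [256], [0, 256])` for channel 0.

```python
import numpy as np

def channel_histograms(img):
    """Hypothetical helper: 256-bin counts per channel of an 8-bit BGR image (H x W x 3)."""
    return [np.bincount(img[:, :, c].ravel(), minlength=256) for c in range(3)]

# tiny 2x2 BGR test image
img = np.array([[[0, 10, 255], [0, 10, 255]],
                [[0, 20, 255], [5, 20, 128]]], dtype=np.uint8)

b_hist, g_hist, r_hist = channel_histograms(img)
print(b_hist[0], g_hist[10], g_hist[20], r_hist[255])  # -> 3 2 2 3
```

Each histogram sums to the number of pixels, so comparing images of different sizes usually needs normalising by that count.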

reference:

matplotlib import ft2font error

pip uninstall matplotlib

pip install -U matplotlib==3.2.0rc1

matplotlib multiple plot

Monday, 22 March 2021

opencv 21 detect geometric shapes

original image

apply threshold

draw contour and text

# main.py
import cv2

# shape names by vertex count; 4 vertices is handled separately (square / rectangle)
SHAPE_NAMES = {3: 'triangle', 5: 'pentagon', 6: 'hexagon', 7: 'heptagon',
               8: 'octagon', 9: 'nonagon', 10: 'star'}

img = cv2.imread('assets/geometric shapes.jpg')
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
_, thresh = cv2.threshold(gray, 70, 80, cv2.THRESH_BINARY)
contours, _ = cv2.findContours(thresh, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)

for contour in contours:
    # skip small contours (noise)
    if cv2.contourArea(contour) < 2000:
        continue

    # approxPolyDP(contour, accuracy, shape closed?)
    # accuracy = 1% of the contour perimeter
    approx = cv2.approxPolyDP(contour, 0.01 * cv2.arcLength(contour, True), True)
    cv2.drawContours(img, [approx], 0, (255, 255, 255), 1)
    # print(approx)

    # label position: 15 px above the first vertex
    x = int(approx.ravel()[0])
    y = int(approx.ravel()[1]) - 15

    n = len(approx)
    if n == 4:
        # a quadrilateral with a near 1:1 bounding box is treated as a square
        _, _, w, h = cv2.boundingRect(approx)
        aspect_ratio = float(w) / h
        label = 'square' if 0.95 <= aspect_ratio <= 1.05 else 'rectangle'
    else:
        # more than 10 vertices (or any unlisted count) is treated as a circle
        label = SHAPE_NAMES.get(n, 'circle')

    cv2.putText(img, label, (x, y), cv2.FONT_HERSHEY_COMPLEX, 0.5, (255, 255, 255))

cv2.imshow('threshold', thresh)
cv2.imshow('contours', img)
cv2.waitKey(0)
cv2.destroyAllWindows()
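The vertex count that decides the label comes from cv2.approxPolyDP, which OpenCV documents as using the Douglas-Peucker algorithm. Below is a simplified NumPy sketch for an open polyline (OpenCV also handles closed contours); `rdp` is a hypothetical helper and `epsilon` corresponds to the `0.01 * arcLength` accuracy in the script.

```python
import numpy as np

def rdp(points, epsilon):
    """Hypothetical helper: Ramer-Douglas-Peucker simplification of an open polyline (N x 2).

    Keep the point farthest from the chord between the end points if it lies more
    than epsilon away and recurse on both halves; otherwise keep only the ends.
    """
    start, end = points[0], points[-1]
    chord = end - start
    length = np.hypot(*chord)
    if length == 0:
        dists = np.hypot(*(points - start).T)
    else:
        # perpendicular distance of every point to the chord (2D cross product)
        dists = np.abs(chord[0] * (points[:, 1] - start[1])
                       - chord[1] * (points[:, 0] - start[0])) / length
    idx = int(np.argmax(dists))
    if dists[idx] > epsilon:
        left = rdp(points[:idx + 1], epsilon)
        right = rdp(points[idx:], epsilon)
        return np.vstack([left[:-1], right])  # drop the duplicated split point
    return np.vstack([start, end])

# three sides of a 10x10 square, one point per pixel: (0,0)->(10,0)->(10,10)->(0,10)
side1 = [(x, 0) for x in range(0, 10)]
side2 = [(10, y) for y in range(0, 10)]
side3 = [(x, 10) for x in range(10, -1, -1)]
path = np.array(side1 + side2 + side3, dtype=float)

print(rdp(path, epsilon=1.0).astype(int).tolist())
# -> [[0, 0], [10, 0], [10, 10], [0, 10]]
```

A larger epsilon removes more vertices, so the 1% factor directly affects whether a noisy square is counted as 4 vertices or more.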


Sunday, 21 March 2021

opencv 20 motion detection

# main.py
import os
import cv2

cap = cv2.VideoCapture("assets/live cam.mp4")

# cv2.imwrite does not create folders, so make sure the output folder exists
os.makedirs('motion detection', exist_ok=True)

_, frame1 = cap.read()
ret, frame2 = cap.read()
i = 0

while cap.isOpened() and ret:
    # pixels that changed between two consecutive frames
    diff = cv2.absdiff(frame1, frame2)
    gray = cv2.cvtColor(diff, cv2.COLOR_BGR2GRAY)
    blur = cv2.GaussianBlur(gray, (5, 5), 0)
    _, thresh = cv2.threshold(blur, 20, 255, cv2.THRESH_BINARY)
    # dilate to fill holes and merge nearby blobs
    dilated = cv2.dilate(thresh, None, iterations=3)
    contours, _ = cv2.findContours(dilated, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

    # cv2.drawContours(frame1, contours, -1, (0, 0, 255), 2)
    for contour in contours:
        # ignore small changes (noise)
        if cv2.contourArea(contour) < 2000:
            continue
        (x, y, w, h) = cv2.boundingRect(contour)
        cv2.rectangle(frame1, (x, y), (x + w, y + h), (0, 0, 255), 5)

    cv2.imshow('feed', frame1)

    i += 1
    cv2.imwrite(f'motion detection/img{i}.jpg', frame1)

    frame1 = frame2
    ret, frame2 = cap.read()  # ret is False at the end of the video

    # read the keyboard once per frame so no key press is lost
    key = cv2.waitKey(1) & 0xFF
    if key == ord('q'):
        break
    if key == ord('p'):
        # wait until any key is pressed
        cv2.waitKey(-1)

cap.release()
cv2.destroyAllWindows()
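The core of the frame-differencing step (cv2.absdiff followed by THRESH_BINARY) can be sketched in plain NumPy on tiny synthetic frames. The helpers `motion_mask` and `bounding_box` are hypothetical; the sketch skips the blur and dilation and returns one box around all changed pixels rather than one box per contour.

```python
import numpy as np

def motion_mask(frame1, frame2, threshold=20):
    """Hypothetical helper: 255 where a grayscale pixel changed by more than threshold."""
    # cast to int16 first so the subtraction cannot wrap around like uint8 would
    diff = np.abs(frame1.astype(np.int16) - frame2.astype(np.int16))
    return np.where(diff > threshold, 255, 0).astype(np.uint8)

def bounding_box(mask):
    """Hypothetical helper: (x, y, w, h) around all nonzero pixels, or None if nothing moved."""
    ys, xs = np.nonzero(mask)
    if len(xs) == 0:
        return None
    x, y = xs.min(), ys.min()
    return int(x), int(y), int(xs.max() - x + 1), int(ys.max() - y + 1)

frame1 = np.full((10, 10), 50, dtype=np.uint8)
frame2 = frame1.copy()
frame2[2:5, 3:7] = 200  # an "object" appears in rows 2-4, columns 3-6

print(bounding_box(motion_mask(frame1, frame2)))  # -> (3, 2, 4, 3)
```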

------------------------------------

# screenshot.py
import os
import pyscreenshot as ImageGrab

# make sure the output folder exists before saving
os.makedirs('screenshot', exist_ok=True)

for i in range(200):
    im = ImageGrab.grab(bbox=(100, 500, 1900, 1500))  # X1, Y1, X2, Y2
    im.save(f'screenshot/img{i}.jpg')

reference:

screenshot

pip install pyscreenshot

pip install image

live cam

Saturday, 20 March 2021

opencv 19 contours

adaptive threshold

contour

# main.py
import numpy as np
import cv2

cap = cv2.VideoCapture("assets/Santa Barbara.mp4")

while True:
    ret, frame = cap.read()
    if not ret:  # end of video or read error
        break

    height, width = frame.shape[:2]
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    # adaptive threshold (frame, max pixel value, adaptive method, threshold type,
    #                     neighbour block size, c constant subtracted from the local mean)
    # th1 = cv2.adaptiveThreshold(gray, 255, cv2.ADAPTIVE_THRESH_MEAN_C, cv2.THRESH_BINARY, 11, 2)
    th2 = cv2.adaptiveThreshold(gray, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY, 11, 2)

    contours, hierarchy = cv2.findContours(th2, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)

    # draw the contours in black on a white canvas the size of the frame
    contour_img = np.full((height, width, 3), 255, dtype=np.uint8)
    cv2.drawContours(contour_img, contours, -1, (0, 0, 0), 1)

    cv2.imshow('frame', frame)
    cv2.imshow('gray', gray)
    cv2.imshow('threshold', th2)
    cv2.imshow('contours', contour_img)

    # read the keyboard once per frame so no key press is lost
    key = cv2.waitKey(1) & 0xFF
    if key == ord('q'):
        break
    if key == ord('p'):
        # wait until any key is pressed
        cv2.waitKey(-1)

cap.release()
cv2.destroyAllWindows()
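Adaptive thresholding compares each pixel with its own neighbourhood instead of one global value. Here is a NumPy sketch of the ADAPTIVE_THRESH_MEAN_C + THRESH_BINARY case (the script uses the Gaussian-weighted variant). `adaptive_mean_threshold` is a hypothetical helper, and edge-replicated borders are an assumption that may differ from OpenCV's border handling.

```python
import numpy as np

def adaptive_mean_threshold(gray, block_size=11, c=2):
    """Hypothetical helper: 255 where a pixel is brighter than (local mean - c), else 0."""
    r = block_size // 2
    k = block_size
    padded = np.pad(gray.astype(np.float64), r, mode='edge')
    # summed-area table: integral[i, j] = sum of padded[:i, :j]
    integral = np.pad(padded.cumsum(0).cumsum(1), ((1, 0), (1, 0)))
    h, w = gray.shape
    box = (integral[k:k + h, k:k + w] - integral[:h, k:k + w]
           - integral[k:k + h, :w] + integral[:h, :w])
    mean = box / (k * k)
    return np.where(gray > mean - c, 255, 0).astype(np.uint8)

# uneven lighting: left half dim (60), right half bright (200); each half has
# one "ink" pixel 20 levels darker than its surroundings
gray = np.zeros((20, 40), dtype=np.uint8)
gray[:, :20] = 60
gray[:, 20:] = 200
gray[10, 5] = 40
gray[10, 35] = 180

out = adaptive_mean_threshold(gray)
print(out[10, 5], out[10, 35], out[0, 0], out[0, 39])  # -> 0 0 255 255
```

No single global threshold separates both dark pixels here: a value that catches the pixel at 40 misses the one at 180, and a value that catches 180 turns the whole dim half black.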


opencv 18 blending image with pyramids

stacked image

blended image

image pyramid (same image with different resolutions)

Laplacian pyramid (the lowest-resolution image plus, at each resolution, the edge detail lost when the image was scaled down)

# main.py
import numpy as np
import cv2

mountain1 = cv2.imread('assets/mountain1.jpg')
mountain1 = cv2.resize(mountain1, (1200, 800))
# print(mountain1.shape)
mountain2 = cv2.imread('assets/mountain2.jpg')
mountain2 = cv2.resize(mountain2, (1200, 800))
# print(mountain2.shape)

# naive blend: left 700 columns of mountain1, the rest of mountain2 (visible seam)
mountain_stack = np.hstack((mountain1[:, :700], mountain2[:, 700:]))

# generate Gaussian pyramid for mountain1
# float32 keeps the negative values of the Laplacian levels below
# (cv2.subtract on uint8 images would clip them to 0)
mountain1_copy = mountain1.astype(np.float32)
mountain1_pyramid = [mountain1_copy]
for i in range(6):
    # decrease image resolution
    mountain1_copy = cv2.pyrDown(mountain1_copy)
    mountain1_pyramid.append(mountain1_copy)

# generate Gaussian pyramid for mountain2
mountain2_copy = mountain2.astype(np.float32)
mountain2_pyramid = [mountain2_copy]
for i in range(6):
    # decrease image resolution
    mountain2_copy = cv2.pyrDown(mountain2_copy)
    mountain2_pyramid.append(mountain2_copy)

# generate Laplacian pyramid for mountain1
mountain1_copy = mountain1_pyramid[5]
lp_mountain1 = [mountain1_copy]
for i in range(5, 0, -1):
    resolution_up = cv2.pyrUp(mountain1_pyramid[i])
    shape = mountain1_pyramid[i - 1].shape
    # pyrUp can be a pixel off for odd sizes, so match the target size exactly
    resolution_up = cv2.resize(resolution_up, (shape[1], shape[0]))
    laplacian = cv2.subtract(mountain1_pyramid[i - 1], resolution_up)
    lp_mountain1.append(laplacian)

# generate Laplacian pyramid for mountain2
mountain2_copy = mountain2_pyramid[5]
lp_mountain2 = [mountain2_copy]
for i in range(5, 0, -1):
    resolution_up = cv2.pyrUp(mountain2_pyramid[i])
    shape = mountain2_pyramid[i - 1].shape
    resolution_up = cv2.resize(resolution_up, (shape[1], shape[0]))
    laplacian = cv2.subtract(mountain2_pyramid[i - 1], resolution_up)
    lp_mountain2.append(laplacian)

# stack laplacian images: left 7/12 of each level from mountain1 (700 / 1200 px)
lp_pyramid = []
for mount1_lap, mount2_lap in zip(lp_mountain1, lp_mountain2):
    col = mount1_lap.shape[1]
    # print(mount1_lap.shape)
    split = int(col * 7 / 12)
    lp_stack = np.hstack((mount1_lap[:, :split], mount2_lap[:, split:]))
    # print(lp_stack.shape)
    lp_pyramid.append(lp_stack)

# reconstruct stacked image
# reconstruct starts as the low resolution stacked image (lp_pyramid[0]);
# each step scales it up and adds the laplacian edges of the next level
reconstruct = lp_pyramid[0]
for i in range(1, 6):
    reconstruct = cv2.pyrUp(reconstruct)
    # print(reconstruct.shape)
    # print(lp_pyramid[i].shape)
    shape = lp_pyramid[i].shape
    reconstruct = cv2.resize(reconstruct, (shape[1], shape[0]))
    reconstruct = cv2.add(lp_pyramid[i], reconstruct)

# back to 8-bit for display
blended = np.clip(reconstruct, 0, 255).astype(np.uint8)

cv2.imshow('mountain1', mountain1)
cv2.imshow('mountain2', mountain2)
cv2.imshow('mountain stack', mountain_stack)

"""
for i in range(6):
    image_name = 'mountain2 pyramid' + str(i)
    cv2.imshow(image_name, mountain2_pyramid[i].astype(np.uint8))

for i in range(5, -1, -1):
    image_name = 'mountain laplacian ' + str(i)
    cv2.imshow(image_name, np.clip(lp_mountain1[i], 0, 255).astype(np.uint8))
"""

cv2.imshow('blended image', blended)
cv2.waitKey(0)
cv2.destroyAllWindows()
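Why the pyramid can be collapsed back into an image: each Laplacian level stores exactly what was lost between two Gaussian levels. A NumPy-only sketch, assuming 2x2 block averaging and pixel repetition as stand-ins for cv2.pyrDown / cv2.pyrUp (which use a 5x5 Gaussian kernel) and image sizes divisible by 2 at every level; all function names are hypothetical.

```python
import numpy as np

def down(img):
    """Stand-in for cv2.pyrDown: average 2x2 blocks."""
    h, w = img.shape
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def up(img):
    """Stand-in for cv2.pyrUp: repeat every pixel 2x2."""
    return img.repeat(2, axis=0).repeat(2, axis=1)

def laplacian_pyramid(img, levels):
    """[smallest Gaussian level, coarsest detail, ..., finest detail]."""
    gaussian = [img]
    for _ in range(levels):
        gaussian.append(down(gaussian[-1]))
    lp = [gaussian[-1]]
    for i in range(levels, 0, -1):
        lp.append(gaussian[i - 1] - up(gaussian[i]))  # detail lost by downsampling
    return lp

def collapse_pyramid(lp):
    """Scale up and add back the detail, level by level."""
    img = lp[0]
    for detail in lp[1:]:
        img = up(img) + detail
    return img

rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(16, 16)).astype(np.float64)
lp = laplacian_pyramid(img, levels=3)
print(np.allclose(collapse_pyramid(lp), img))  # -> True
```

The detail levels contain negative values, which is why the blending script above works in float32. With OpenCV's smoother pyrUp, joining the two images at every level spreads the coarse seam over a wide area while fine detail switches over sharply.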


Thursday, 18 March 2021

opencv 17 canny edge detection

gray scale

canny edge detection

# main.py
import numpy as np
import cv2

cap = cv2.VideoCapture("assets/Santa Barbara.mp4")

# initial Canny thresholds, updated by the trackbars
high = 200
low = 100

# blank white image that hosts the trackbars window
trackbars_img = np.full((50, 500, 3), 255, dtype=np.uint8)
cv2.imshow('trackbars', trackbars_img)

def high_change(x):
    global high
    high = x

def low_change(x):
    global low
    low = x

# createTrackbar(name, window, initial value, max value, callback)
cv2.createTrackbar('low', 'trackbars', 100, 150, low_change)
cv2.createTrackbar('high', 'trackbars', 200, 255, high_change)

while True:
    ret, frame = cap.read()
    if not ret:  # end of video or read error
        break

    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    # gradients above high are edges, below low are discarded,
    # in between are kept only if connected to a strong edge
    canny = cv2.Canny(gray, low, high)

    cv2.imshow('frame', frame)
    cv2.imshow('gray', gray)
    cv2.imshow('canny', canny)

    # read the keyboard once per frame so no key press is lost
    key = cv2.waitKey(1) & 0xFF
    if key == ord('q'):
        break
    if key == ord('p'):
        # wait until any key is pressed
        cv2.waitKey(-1)

cap.release()
cv2.destroyAllWindows()
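The two trackbars control Canny's hysteresis step. A NumPy sketch of just that step on a precomputed gradient-magnitude array; the hypothetical `hysteresis` helper leaves out the gradient computation and non-maximum suppression that come before it, and its `>=` comparisons are an assumption about the exact boundary handling.

```python
import numpy as np
from collections import deque

def hysteresis(magnitude, low, high):
    """Hypothetical helper: strong pixels (>= high) are edges; weak pixels (>= low)
    survive only if 8-connected, directly or through other weak pixels, to a strong one."""
    strong = magnitude >= high
    weak = (magnitude >= low) & ~strong
    edges = strong.copy()
    queue = deque(zip(*np.nonzero(strong)))
    h, w = magnitude.shape
    while queue:
        y, x = queue.popleft()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w and weak[ny, nx] and not edges[ny, nx]:
                    edges[ny, nx] = True
                    queue.append((ny, nx))
    return edges.astype(np.uint8) * 255

# one row of gradient magnitudes: a strong pixel (250) with a chain of weak ones (120)
# on its left, and an isolated weak pixel at the end
mag = np.array([[0, 120, 120, 250, 0, 0, 120]], dtype=float)
print(hysteresis(mag, low=100, high=200).tolist())
# -> [[0, 255, 255, 255, 0, 0, 0]]
```

Lowering `low` lets longer weak chains survive; lowering `high` creates more seeds to grow from.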


Wednesday, 17 March 2021

opencv 16 image gradients | edge detection

An image gradient is a directional change in the intensity or color in an image.

gray scale

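Laplacian (second derivative), edges in every direction are detected

sobelX (derivative along the x axis) detects vertical edges, i.e. edges running along the Y axis

sobelY (derivative along the y axis) detects horizontal edges, i.e. edges running along the X axis

sobelCombined (bitwise OR of sobelX and sobelY) shows edges in both directions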

# main.py
import cv2

cap = cv2.VideoCapture("assets/Santa Barbara.mp4")

while True:
    ret, frame = cap.read()
    if not ret:  # end of video or read error
        break

    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    # compute in 64-bit float so negative gradients are kept, then take the
    # absolute value and saturate to 0-255 with convertScaleAbs
    # (np.uint8(...) does not saturate values above 255)
    lap = cv2.Laplacian(gray, cv2.CV_64F, ksize=3)
    lap = cv2.convertScaleAbs(lap)

    # Sobel(image, depth, dx, dy)
    sobelX = cv2.Sobel(gray, cv2.CV_64F, 1, 0)  # derivative in x: vertical edges
    sobelY = cv2.Sobel(gray, cv2.CV_64F, 0, 1)  # derivative in y: horizontal edges
    sobelX = cv2.convertScaleAbs(sobelX)
    sobelY = cv2.convertScaleAbs(sobelY)
    sobelCombined = cv2.bitwise_or(sobelX, sobelY)

    cv2.imshow('frame', frame)
    cv2.imshow('gray', gray)
    cv2.imshow('laplacian', lap)
    cv2.imshow('sobelX', sobelX)
    cv2.imshow('sobelY', sobelY)
    cv2.imshow('sobelCombined', sobelCombined)

    # read the keyboard once per frame so no key press is lost
    key = cv2.waitKey(1) & 0xFF
    if key == ord('q'):
        break
    if key == ord('p'):
        # wait until any key is pressed
        cv2.waitKey(-1)

cap.release()
cv2.destroyAllWindows()
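What the 3x3 Sobel kernels compute can be checked by hand with NumPy on an image containing a single vertical edge. The helper `filter3x3` is hypothetical and only fills the "valid" region (no border padding).

```python
import numpy as np

# 3x3 Sobel kernels (cv2.Sobel's default ksize=3)
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float64)
SOBEL_Y = SOBEL_X.T

def filter3x3(img, kernel):
    """Hypothetical helper: correlate an image with a 3x3 kernel, valid region only."""
    h, w = img.shape
    out = np.zeros((h - 2, w - 2))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * img[dy:dy + h - 2, dx:dx + w - 2]
    return out

# dark left half, bright right half: one vertical edge
img = np.zeros((5, 6))
img[:, 3:] = 100

gx = filter3x3(img, SOBEL_X)
gy = filter3x3(img, SOBEL_Y)
print(np.abs(gx).max(), np.abs(gy).max())  # -> 400.0 0.0
```

Only the x derivative responds to a vertical edge, and a step of just 100 gray levels already gives 400, which does not fit in 8 bits; that is why the script saturates with cv2.convertScaleAbs.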


Tuesday, 16 March 2021

opencv 15 smoothing | blurring

adaptive threshold

blur (2d convolution)

2d convolution kernel

gaussian blur reduces high-frequency noise

gaussian convolution kernel

median blur removes salt-and-pepper noise

bilateral filter smooths the image while preserving edges

# main.py
import numpy as np
import cv2

cap = cv2.VideoCapture("assets/Santa Barbara.mp4")

while True:
    ret, frame = cap.read()
    if not ret:  # end of video or read error
        break

    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    # adaptive threshold (frame, max pixel value, adaptive method, threshold type,
    #                     neighbour block size, c constant)
    th1 = cv2.adaptiveThreshold(gray, 255, cv2.ADAPTIVE_THRESH_MEAN_C, cv2.THRESH_BINARY, 11, 2)
    # th2 = cv2.adaptiveThreshold(gray, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY, 11, 2)

    # 5x5 averaging kernel; filter2D with it averages each 5x5 neighbourhood,
    # the same operation cv2.blur(th1, (5, 5)) performs
    kernel = np.ones((5, 5), np.float32) / 25
    # conv = cv2.filter2D(th1, -1, kernel)
    blur = cv2.blur(th1, (5, 5))

    # gaussianBlur (frame, kernel size, sigma); sigma 0 = derived from the kernel size
    # designed to remove high frequency noise
    gblur = cv2.GaussianBlur(th1, (5, 5), 0)

    # median method designed to remove salt and pepper noise
    median = cv2.medianBlur(th1, 5)

    # bilateralFilter (frame, neighbourhood diameter, sigmaColor, sigmaSpace) preserves edges
    bilateralFilter = cv2.bilateralFilter(th1, 9, 75, 75)

    cv2.imshow('frame', frame)
    cv2.imshow('threshold', th1)
    cv2.imshow('convolution', blur)
    cv2.imshow('gaussian', gblur)
    cv2.imshow('median', median)
    cv2.imshow('bilateral', bilateralFilter)

    # read the keyboard once per frame so no key press is lost
    key = cv2.waitKey(1) & 0xFF
    if key == ord('q'):
        break
    if key == ord('p'):
        # wait until any key is pressed
        cv2.waitKey(-1)

cap.release()
cv2.destroyAllWindows()
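Why the median blur suits salt-and-pepper noise better than averaging: a single extreme pixel shifts the mean of its neighbourhood but not the median. A NumPy sketch with 3x3 windows; `box_blur3` and `median_blur3` are hypothetical helpers, and edge replication at the borders is an assumption (interior results do not depend on it).

```python
import numpy as np

def neighbourhoods3(img):
    """Stack of the 9 shifted copies that make up every 3x3 window (edge-replicated)."""
    p = np.pad(img.astype(np.float64), 1, mode='edge')
    h, w = img.shape
    return [p[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]

def box_blur3(img):
    """Sketch of cv2.blur(img, (3, 3)): mean of each 3x3 window."""
    return np.mean(neighbourhoods3(img), axis=0)

def median_blur3(img):
    """Sketch of cv2.medianBlur(img, 3): median of each 3x3 window."""
    return np.median(neighbourhoods3(img), axis=0)

# flat gray patch with one "salt" (255) and one "pepper" (0) pixel
img = np.full((7, 7), 100, dtype=np.uint8)
img[2, 2] = 255
img[4, 4] = 0

print(median_blur3(img)[2, 2], median_blur3(img)[4, 4])  # -> 100.0 100.0
print(round(box_blur3(img)[2, 2], 1))                    # -> 117.2
```

The median removes both outliers completely, while the mean only smears the salt pixel into its neighbours.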

reference: